
piqer for: Global finds Technology and society

Prague-based media development worker from Poland with a journalistic background. Previously worked on digital issues in Brussels. Piqs about digital issues, digital rights, data protection, new trends in journalism and anything else that grabs my attention.

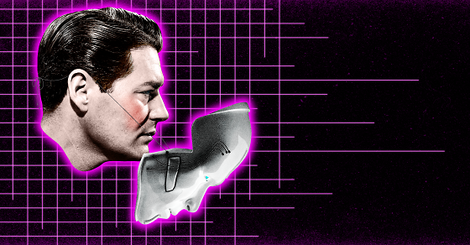

Why So-Called Objective And Unbiased Algorithms Might Actually Be Racist

In the 19th century, Viennese physician Franz Joseph Gall and his theories laid the foundation for phrenology, a now-defunct field of study, once considered a science, in which the shape of the skull was believed to indicate mental faculties and personality. It was used, for example, to justify slavery (remember Django Unchained?).

Now, according to Vocativ, we're faced with a "computer-aided rehash of phrenology, with computer vision and learning algorithms standing in for cranial measurement tools".

Existing face recognition programs and their algorithms purport to predict whether someone is a “pedophile” or a “terrorist”, or how likely they are to commit a crime, all based merely on facial features.

As a result, as Vocativ points out, "those who are already disproportionately targeted by the criminal justice system (...) are again disproportionately branded by the algorithm". In other words, "rather than removing human biases, the algorithm creates a feedback loop that reflects and amplifies them".

Authorities see machine learning systems as useful tools for maintaining homeland security and are steadily increasing their use of facial recognition systems. But the algorithms can also be dangerous. In particular, they can be "the perfect weapon for authoritarian leaders because it lets them efficiently and opaquely enforce systems that are already biased against oppositional and marginalized groups".

This comprehensive yet easy-to-read article investigates the human impact of big data and looks for ways to make algorithms more ethical. It's not just my recommendation: Vocativ journalists use data-mining technology to find story leads and angles, so let's assume they know what they're doing.
