piqer for: Global finds, Technology and society, Health and Sanity

Nechama Brodie is a South African journalist and researcher. She is the author of six books, including two critically acclaimed urban histories of Johannesburg and Cape Town. She works as the head of training and research at TRI Facts, part of independent fact-checking organisation Africa Check, and is completing a PhD in data methodology and media studies at the University of the Witwatersrand.

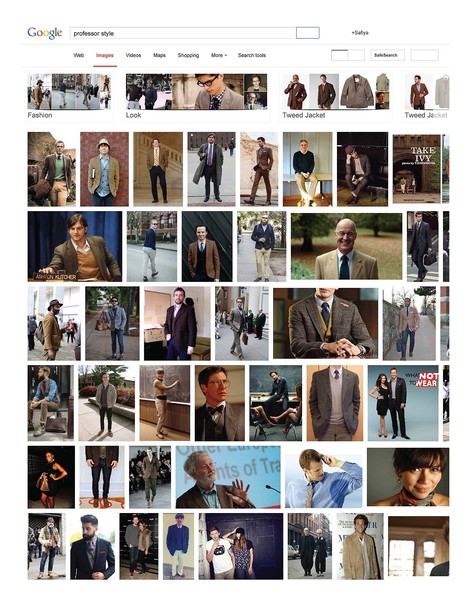

The Misogynoir Of Google

This article by academic Safiya Noble (an extract from her book Algorithms of Oppression: How Search Engines Reinforce Racism) starts with a seemingly innocuous question: what happens when you Google 'black girls'? If you had asked this in 2009, as Noble did, you would likely have been presented with results that positioned black females as hypersexualised objects. Most of the results Noble saw were for sites containing pornographic content (which was not filtered out of Google's AdWords until 2014).

Starting from a screengrab, Noble explores what other biases were hidden (or, often, not so hidden) in seemingly innocent search terms, showing case after case after case of bias, racism and sexism, borrowed from history and old media and deeply embedded in the new. When complaints about its search results are raised with Google, the company's initial response is to throw up its arms and shrug: it's the algorithm, what can we do? Noble asks the key question: "If Google isn’t responsible for its algorithm, then who is?"

This article contains excellent, multi-year, real-life examples of algorithmic bias, and clearly shows that it is more than 'accidental' coding. Rather, the apparent thoughtlessness is a product of systemic sexism, racism and exclusion, which is not being successfully addressed because of the lack of diversity in most software companies. Noble also points out that, since companies like Google are able to tweak their racist/sexist glitches when they are exposed, they must surely be able to do something systemic too.