piqer for: Global finds Technology and society

Prague-based media development worker from Poland with a journalistic background. Previously worked on digital issues in Brussels. Piqs about digital issues, digital rights, data protection, new trends in journalism and anything else that grabs my attention.

The Facebook Files: World’s Largest Censor Or Merely A Bystander

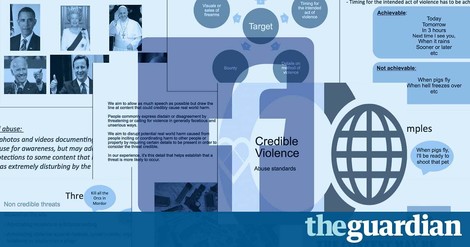

Can photos of animal abuse be shared on Facebook? Should videos of violent deaths be deleted, or merely marked as disturbing? Is sharing of child abuse imagery permitted? Are videos of abortions allowed? Can people livestream attempts to self-harm on Facebook Live? Are comments “I hope someone kills you” or “let’s beat up fat kids” credible threats?

Answers to those puzzling questions, and more, can be found in a series of reports recently published by the Guardian. Based on a leak of over 100 internal training manuals, spreadsheets and flowcharts, the Guardian uncovers how Facebook determines what its users can post, and what is not allowed. The documents reveal the intricate moderation guidelines of the social media giant, which struggles with policing issues such as hate speech, racism, violence, self-harm, bullying, terrorism, sextortion, revenge porn, and even cannibalism.

The rules are arbitrary, often inconsistent and lack a logical rationale. On top of the complexity and room for potential bias, Facebook also creaks under the sheer volume of content, forcing its moderators to make split-second decisions.

“Facebook cannot keep control of its content,” a source told the paper. “It has grown too big, too quickly.”

Free speech advocates will definitely sound the alarm over potential censorship. At the same time, Facebook's refusal to delete some of the gruesome and graphic videos and images might strike a chord with those who believe the company isn't doing enough to curb online violence.

It remains to be seen whether the leak will lead to any changes in the company's rulebook, but it clearly demonstrates the need for closer scrutiny and more stringent regulation of how the social media giant controls the billions of posts created by its 2 billion users.