piqer for: Global finds, Technology and society

Prague-based media development worker from Poland with a journalistic background. Previously worked on digital issues in Brussels. Piqs about digital issues, digital rights, data protection, new trends in journalism and anything else that grabs my attention.

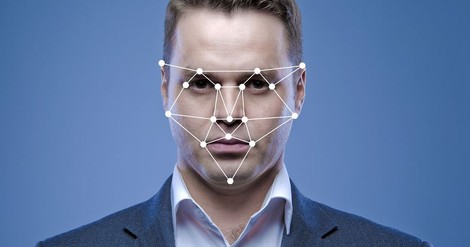

A Critical Appraisal Of AI's "Gaydar"

Researchers at Stanford University found that a computer algorithm can predict people's sexual orientation from photographs of their faces. Michal Kosinski and Yilun Wang trained the AI to detect subtle differences in facial features, then tested it by showing it images of a gay person and a straight person side by side. Trained on more than 35,000 images from a dating website, the software ended up with a significantly better "gaydar" than humans.

“When presented with a pair of participants, one gay and one straight, both chosen randomly, the model could correctly distinguish between them 81% of the time for men and 74% of the time for women. The percentage rises to 91% for men and 83% for women when the software reviewed five images per person. In both cases, it far outperformed human judges, who were able to make an accurate guess only 61% of the time for men and 54% for women,” reads the Mashable article on the study, which was first reported by The Economist.

LGBTQ rights organizations slammed the study, calling it “dangerous and flawed” and warning that it could “cause harm to LGBTQ people around the world.” Indeed, the research risks giving ideas to governments in the 72 countries where same-sex relationships are outlawed and LGBTQ people face criminal prosecution. What if, for example, the Chechen government decided to use similar facial-recognition software in its anti-gay purge, increasing the number of abductions, detentions, disappearances and deaths?

Read the Mashable article to learn about other risks and drawbacks of the study, with limitations ranging from racial bias to a non-representative sample. Mashable has produced a critical and thorough piece of journalism, the kind that goes beyond standard news reporting and superficial commentary.